Published by

Liverpool Biennial in partnership with DATA browser series.

ISSN: 2399-9675

Editor:

Joasia Krysa, Manuela Moscoso

Editorial Assistant:

Abi Mitchell

Copyeditor:

Melissa Larner

Web Design:

Mark El-Khatib

Cover Design:

Manuela Moscoso (artwork), Joasia Krysa (words), Helena Geilinger (graphic design)

Creative AI Lab: The Back-End Environments of Art-Making

Eva Jäger

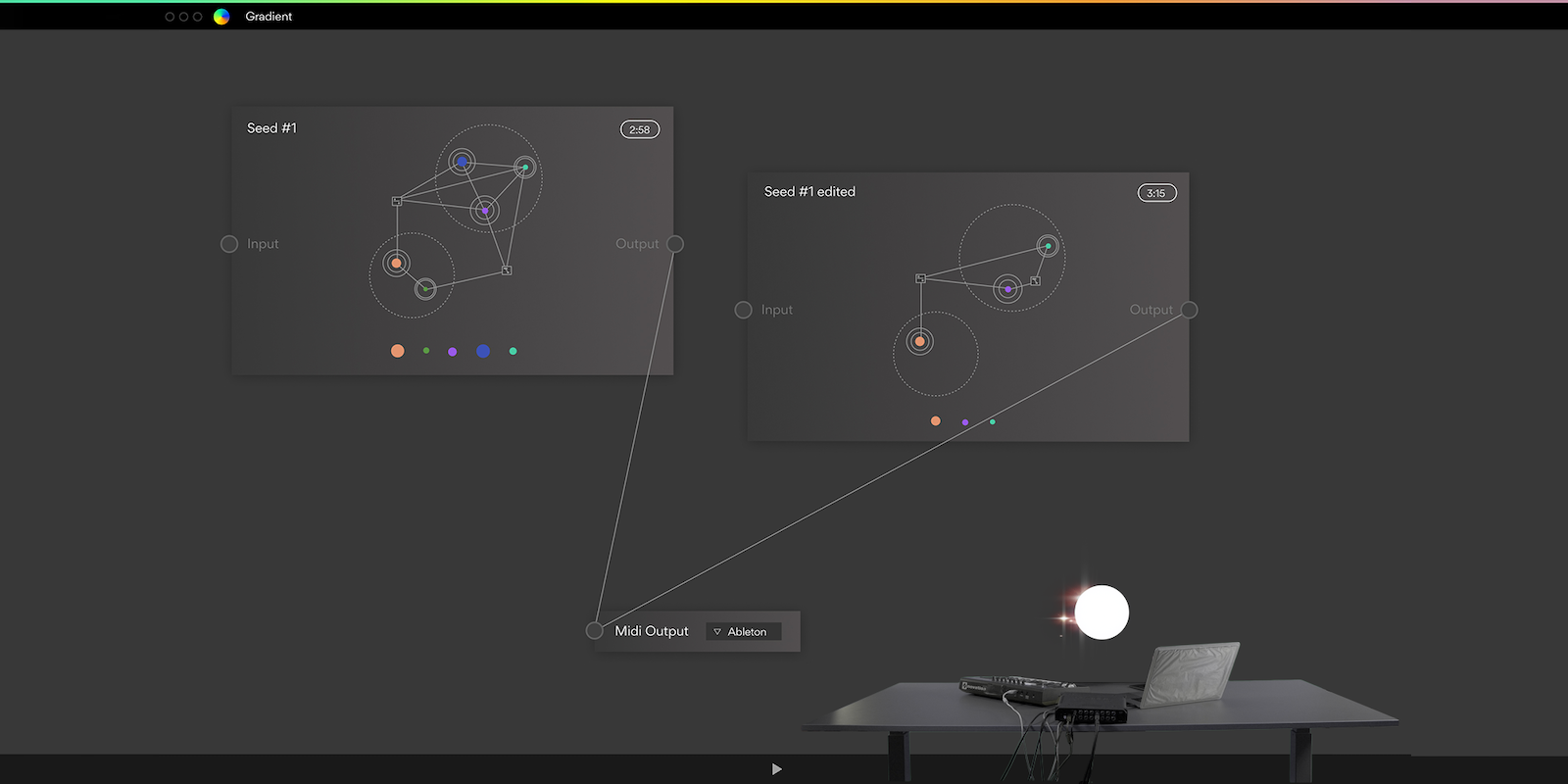

Still from forthcoming ML/AI Interfaces Tutorial Series, 2020. Image courtesy of Trust, Berlin and Ricardo Saavedra

1. The Back-end Environments of Art-Making

The Creative AI Lab is a collaboration between the R&D Platform at Serpentine Galleries and King’s College London’s Department of Digital Humanities. The Lab follows the premise that we are currently at the early stages of understanding the aesthetics and semiotics of ‘artificial intelligence’ (AI). We also approach AI as a framework that holds together a number of disciplines, technologies and systems (creative, cultural and computational). Historically, the themes contained within AI discourse, such as interfaces, automation, data analysis, algorithmic bias, intelligence and alien logics, have featured as cornerstones of various hyped technologies, including robotics, virtual reality and machine learning. Today, AI serves as the wrapper via which we engage with these fundamental concepts of digital culture.

From 2016 to 2020, Serpentine commissioned and oversaw the production of a number of artworks where AI technologies are used as a technical medium as well as a conceptual reference or narrative cue. The Lab, which formed in 2019 and officially launched in July 2020, grew out of a need to explore the experimentation and production phases of these complex projects as creative and research outputs in their own right. By focusing on the production or ‘back-end’ environments of this type of art-making, we have been able to investigate the truly novel ways in which artists are remaking interfaces, building datasets and generally reaching into the grey-box of AI technologies. [1]

Importantly, this emphasis on the back-end has led us to insist that the Lab has no mandate to commission or showcase front-end artworks. Instead, the Creative AI Lab holds space for conversations, research and hands-on experimentation that address the technical frameworks of AI and their impacts on art-making, and conversely, the possible impacts on AI research and development of art-making that deploys AI. [2]

There are a couple of reasons to insist on an exploratory creative R&D format within an art-institutional setting. Firstly, by constructing an organisation within the organisation, we can unbind ourselves from front-end formats such as exhibitions or commissions. Instead, we can follow in the steps of an underrepresented working method within humanities research and museums’ output. [3] Secondly, we can provide a necessary supplement to the generic approach to AI that the art-institutional discourse has thus far offered in interpreting the front-end of artworks made using AI technologies. [4] To this end, our mission is to develop a critical literacy that might help art institutions approach AI as a nuanced medium in art-making. Without this, we will continue to reproduce narratives where art is an antidote to technology rather than a valuable part of its development.

Cultural producers of all kinds should be involved in forming the cultural meaning of AI technologies. And since we cannot separate the cultural meaning of a technology from the technological object itself (for instance, the machine-learning model), [5] it seems that we must go through the back-end.

2. Making Meaning

(What follows is an example of this approach that also forms the basis for our next investigation at the Lab)

At a recent talk, Mercedes Bunz, Principal Investigator of the Lab and Senior Lecturer in the Department of Digital Humanities, King’s College London, reiterated that if the arts and humanities distance themselves from nitty-gritty technology through siloed critique, they will become irrelevant. [6] Instead, she and the Lab work closely with computer scientists as they begin to pivot toward self-critique. Bunz offered some insights into understanding AI technic from the arts-and-humanities perspective – through semiotic studies – that remain under-utilised in computer science.

Most notable is the concept of meaning-making described by Stuart Hall, among others, as a process of both encoding and decoding. [7] It is a process, Bunz argues, that has now been taken up by AI, through deep learning. Understanding contemporary AI as having the capacity to make meaning is crucial if we follow Hall’s logic (as Bunz does in a recent paper on the subject) because then meaning can also be made by calculation – a task to which AI is regularly assigned. [8] This proposes a paradigm shift: the core work of culture, the making of meaning, can now also be made (processed, analysed, calculated) by AI – by the technology itself.

While this is only one specific example (where we admittedly also need to argue that semiotics is what art and culture bring to the table, so to speak), the point is that it confirms that the conceptual meaning of works made with AI technologies is inseparable from their technical meaning. And that meaning can only really be understood by engaging with the technicalities (in the back-end) in a serious way.

As we set out on this investigation and others, we remember to embrace the brittleness of our systems and their specific intelligences. Hopefully, this will bring with it divergent understandings of art-making, artworks and art ecosystems. Perhaps this can give way to an approach that replaces autonomous agents (human subjects) with collaborative coalitions (human and non-human subjects). Perhaps these collaborative coalitions will also produce new meaning.

***

The Lab’s first initiative since launching in July 2020 has been the formation of a database of creative AI tools and resources, which is now embedded in the Stages site. This database is a growing collection of research commissioned and collected by the Creative AI Lab. The latest tools were selected by Luba Elliott. Check back for new entries. We hope that you will explore it (and propose additions). Contact us via fae@serpentinegalleries.org

[1] During a Creative AI panel discussion on the topic ‘Aesthetics of New AI’, Leif Weatherby (NYU Digital Theory H-Lab) noted of AI, ‘It’s not just a black box, it’s at least grey. When you open that up you start to see things that have either aesthetic value, critical value, or both.’

[2] The Serpentine has a history of working in this practice-driven way across its programme, and importantly, not only as a feature of technologically orientated research. A key example of this is the community research undertaken as part of the Edgware Road Project and the Centre for Possible Studies.

[3] Here we reference (within the humanities) the interdisciplinary work of thinker-tinkerers like Gilbert Simondon, who combined research as a media theorist with lab work where he experimented with computer components, taking machines apart and rebuilding them. Or (within the arts) we look to the studio and lab practices of artist-engineers like Roy Ascott and Rebecca Allen, to name a few. This method for working is of course not novel. We focus on it only to examine where this method is located – or more importantly, not located – in the museum.

[4] This is something Nora N. Khan has outlined in her participation with the Lab and in her essay, Towards a Poetics of Artificial Superintelligence: How Symbolic Language Can Help Us Grasp The Nature and Power of What is Coming, included in this Journal.

[5] Gilbert Simondon, in his 1958 Du mode d’existence des objets techniques, writes, ‘Culture has become a system of defense against technics … based on the assumption that technical objects contain no human reality.’

[6] Keynote lecture at the newly opened Centre for Culture and Technology at the University of Southern Denmark.

[7] Stuart Hall, ‘Encoding/decoding,’ in Culture, Media, Language: Working Papers in Cultural Studies, 1972–1979, ed. Stuart Hall, Dorothy Hobson, Andrew Lowe and Paul Willis (London: Hutchinson, 1980), pp. 128–138.

[8] M. Bunz, ‘The calculation of meaning: on the misunderstanding of new artificial intelligence as culture’, Culture, Theory and Critique, 60 (3–4) (2019), pp. 264–78, https://doi.org/10.1080/14735784.2019.1667255

Eva Jäger

Eva Jäger is Associate Curator of Arts Technologies at Serpentine. Together with Dr. Mercedes Bunz, she is Co-investigator of the Creative AI Lab, a collaboration between Serpentine’s R&D Platform and the Department of Digital Humanities, King’s College London. She is also one half of the studio practice Legrand Jäger.

Mercedes Bunz is the Creative AI Lab's Principal Investigator and Senior Lecturer in Digital Society at the Department of Digital Humanities, King’s College London. Her research explores how digital technology transforms knowledge and power.

Alasdair Milne is a researcher at the Creative AI Lab and the recipient of the LAHP/AHRC-funded Collaborative Doctoral Award at King’s College London’s Department of Digital Humanities in collaboration with Serpentine’s R&D Platform. His work is broadly concerned with collaboration – how to comprehend practices of thinking and making that incorporate both the human and the non-human. His PhD will tackle creative AI as a medium in artistic and curatorial practices.
